In simple words: what is mining and where did all the video cards go

You probably heard from the news that all video cards have disappeared from sale. You even found out from there who bought everything – the miners. They “mine” cryptocurrency on their “farms”. I am sure that you have heard about the most famous cryptocurrency – Bitcoin.

But I also believe that you don’t really understand why it started right now, what exactly this mining is about, and why there is so much noise around some strange “electronic candy wrappers” in general. Maybe if everyone is engaged in mining, then you should too? Let’s get to the bottom of what’s going on.

Blockchain

Let’s start with a bit of bitcoin and blockchain basics. You can read more about this in our other article, and here I will write very briefly.

Bitcoin is decentralized virtual money. That is, there is no central authority, no one trusts anyone, but nevertheless, payments can be safely organized. Blockchain helps with this.

“Blockchain technology, in my opinion, is the new internet. It’s an idea on the same level as the internet.“

— Herman Gref

Blockchain is such an Internet diary. Blockchain is a sequential chain of blocks, each of which contains transactions: who transferred how many bitcoins and to whom. In English, it is also called ledger – literally “ledger”. Actually, the ledger is – but with a couple of important features.

The first key feature of the blockchain is that all full-fledged participants in the Bitcoin network store the entire block chain with all transactions for all time. And they constantly add new blocks to the end. I repeat, the entire blockchain is stored by each user in its entirety – and it is exactly the same as that of all other participants.

The second key point: the blockchain is based on cryptography (hence the “crypto” in the word cryptocurrency). The correct operation of the system is guaranteed by mathematics, and not by the reputation of any person or organization.

Those who create new blocks are called miners. As a reward for each new block, its creator now receives 12.5 bitcoins. At the exchange rate as of July 1, 2017, this is approximately $30,000. A little later, we will talk about this in more detail.

By the way, block rewards are the only way to issue bitcoin. That is, all new bitcoins are created with the help of mining.

A new block is created only once every 10 minutes. There are two reasons for this.

Firstly, this was done for stable synchronization – in order to have time to distribute the block throughout the Internet in 10 minutes. If blocks were created continuously by everyone, then the Internet would be filled with different versions, and it would be difficult to understand which of these versions everyone should eventually add to the end of the blockchain.

Secondly, these 10 minutes are spent on making the new block “beautiful” from a mathematical point of view. Only the correct and only beautiful block is added to the end of the blockchain diary.

Why blocks should be “beautiful”

The correct block means that everything is correct in it, everything is according to the rules. The basic rule: the one who transfers the money really has that much money.

And a beautiful block is one whose convolution has many zeros at the beginning. You can again remember more about what a convolution (or “hash” is the result of some mathematical transformation of a block) is from here. But for us now it is completely unprincipled. The important thing is that to get a beautiful block, you need to “shake” it. “Shake” means to slightly change the block – and then check if it suddenly became beautiful.

Each miner continuously “shakes” candidate blocks and hopes that he will be the first one to “shake” a beautiful block, which will be included at the end of the blockchain, which means that this particular miner will receive a reward of $30,000.

At the same time, if suddenly there are ten times more miners, then the blockchain will automatically require that in order to recognize a new block as worthy of being written to the blockchain, it must now be ten times “beautiful”. Thus, the rate of appearance of new blocks will be preserved – one block will appear every 10 minutes anyway. But the probability of a particular miner to receive a reward will decrease by 10 times.

Now we are ready to answer the question why blocks should be beautiful. This is done so that some conditional Vasya cannot take and simply rewrite the entire history of transactions.

Vasya will not be able to say: “No, I did not send Misha 10 bitcoins, in my version of the story there is no such thing – believe me.” Indeed, in this fake version of the story, the blocks must be beautiful, and as we know, in order to shake at least one such block, it is necessary that all the miners work for 10 minutes, which Vasya alone can handle.

Miners

The concept is clear, now let’s take a closer look at the miners.

In 2009, when only enthusiasts (or rather, even only its creators) knew about Bitcoin and it cost five cents apiece, it was easy to mine. There were few miners, say, a hundred. This means that, on average, per day, the conditional miner Innokenty at least once had the luck to shake a block and receive a reward.

By 2013, when the price of Bitcoin rose to hundreds of dollars apiece, there were already so many enthusiastic miners that it would take months to wait for luck. Miners began to unite in “pools”. These are cartels that shake the same block candidate all together, and then share the reward for everyone fairly (in proportion to the effort expended).

Then there were special devices – ASIC. These are microcircuits that are designed specifically to perform a specific task. In this case, ASICs are narrowly focused on shaking Bitcoin blocks as efficiently as possible.

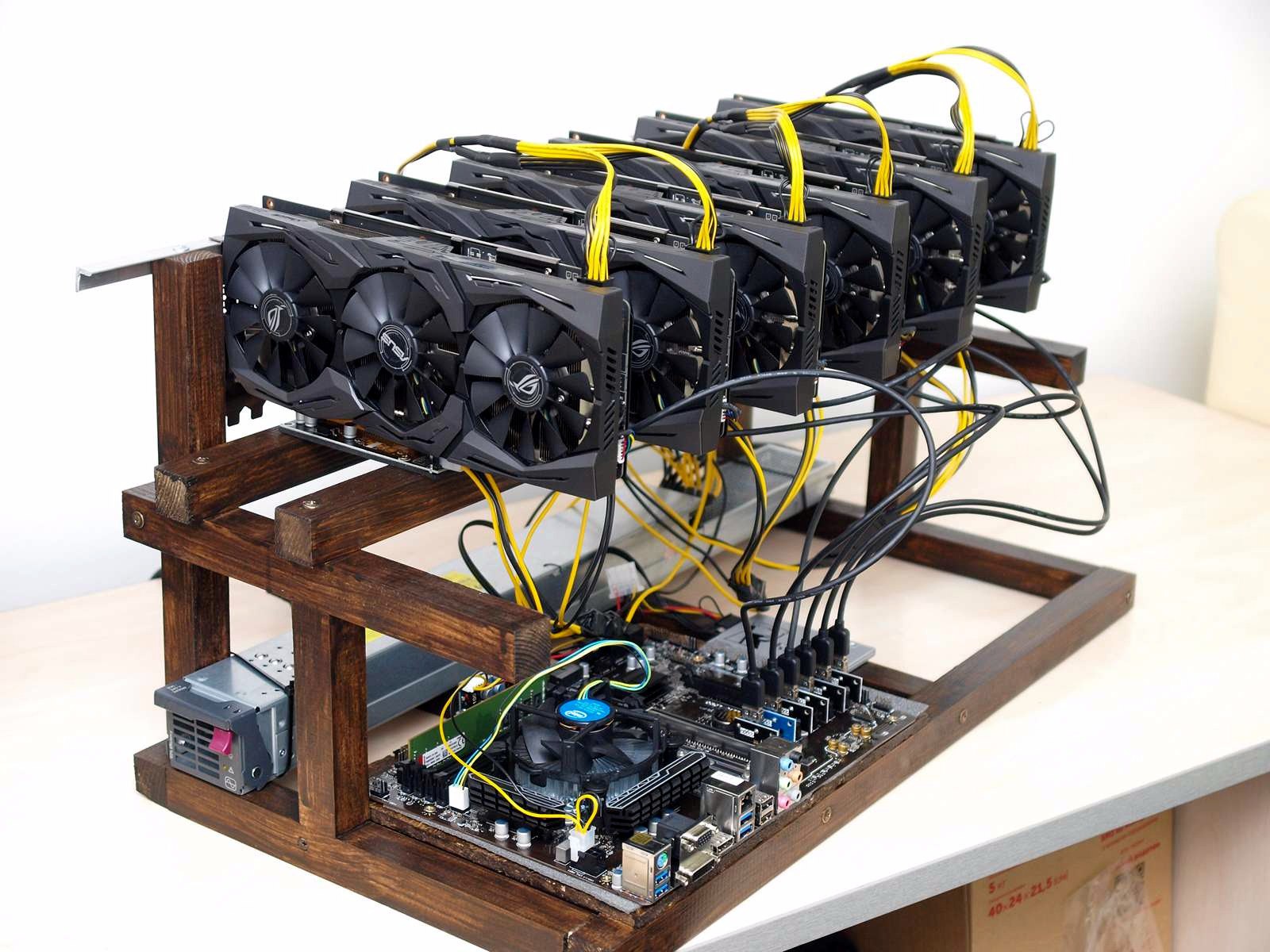

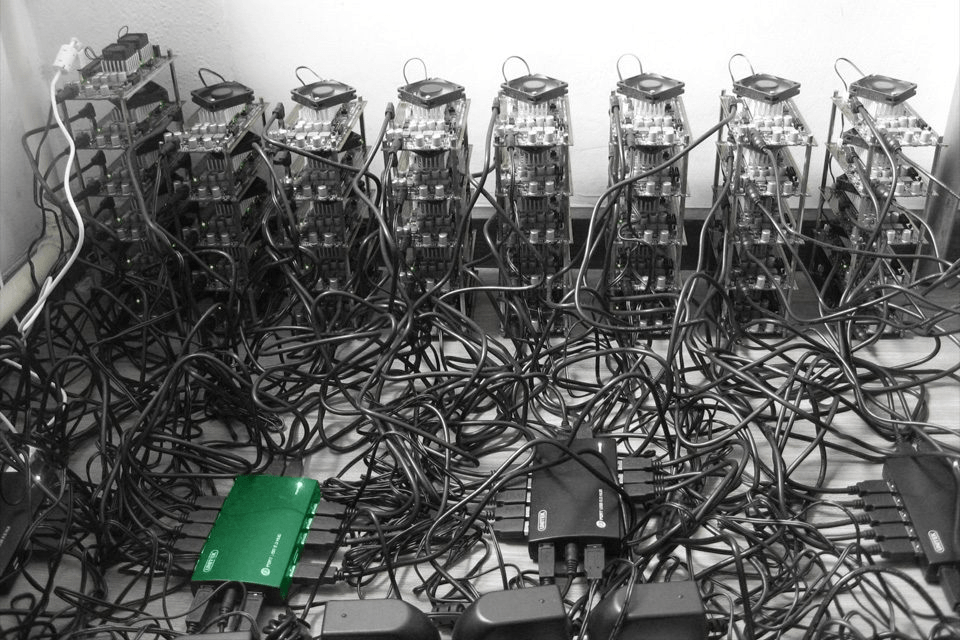

The mining power of ASICs is incomparably greater than the power of a conventional computer that can perform any calculations. In China, Iceland, Singapore and other countries, they began to build huge “farms” from ASIC systems. It is advantageous to locate the farm in a mine underground, because it is cold there. It is even more profitable to build a hydroelectric power station nearby so that electricity is cheaper.

The result of this arms race was that it was completely unjustified to mine bitcoins at home.

Altcoin mining or why video cards disappeared right now

Bitcoin is the first and most popular cryptocurrency. But with the advent of the popularity of cryptocurrencies as a phenomenon, competitors began to appear like mushrooms. Now there are about a hundred alternative cryptocurrencies – the so-called altcoins.

Each altcoin creator does not want to mine his coins at once very difficult and expensive, so he comes up with new criteria for the beauty of blocks. It is desirable that the creation of specialized devices (ASIC) is difficult or delayed as much as possible.

Everything is done so that any fan of this altcoin can take his usual computer, make a tangible contribution to the total power of the network and receive a reward. For “shaking” in this case, a regular video card is used – it just so happened that video cards are well suited for such calculations. Thus, with the help of the availability of the mining process, it is possible to increase the popularity of this altcoin.

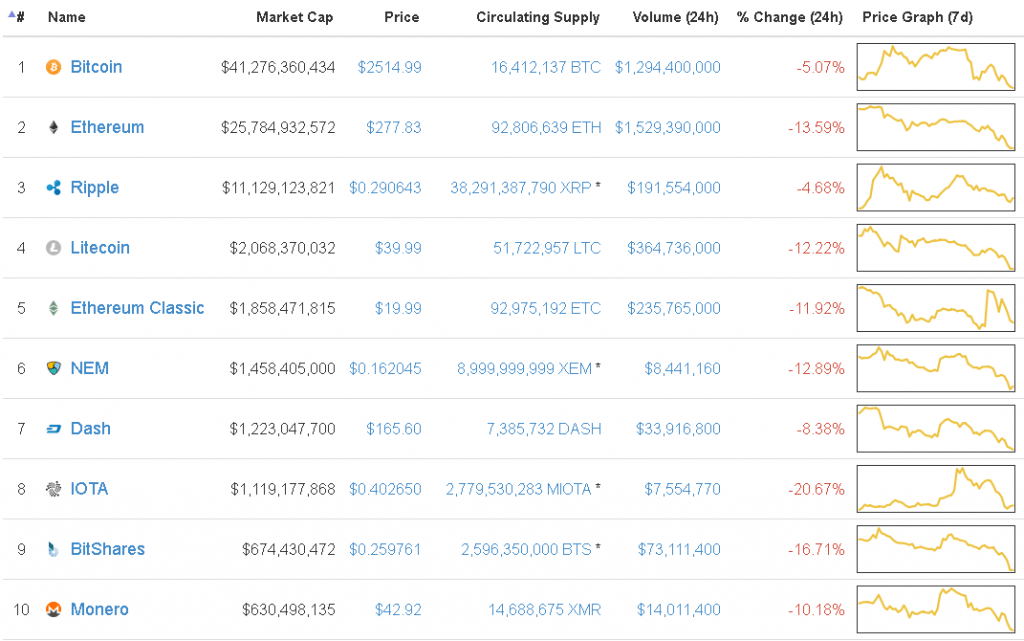

Pay attention to the second line in the table above – Ethereum. This is a relatively new cryptocurrency (appeared in 2015), but with special features. In short, the main innovation of Ethereum is the ability to include in the blockchain not only static information about payments made, but also interactive objects – smart contracts – that work according to programmed rules.

Why this created such a stir, we will discuss in a separate article. For now, it will suffice to say that the new properties of Ethereum have ensured great interest from “crypto-investors” and, as a result, the rapid growth of its exchange price. If at the beginning of 2017 one “ether” cost $8, then by June 1, the rate broke through the $200 mark.

It has become especially profitable to mine Ethereum, which is why miners bought up video cards.

Видеокарта Gigabyte специально для майнинга — сразу без всяких ненужных вещей вроде выхода на монитор.

Источник

What happens if miners stop mining

Suppose that mining has become unprofitable (the profit does not pay off the costs of equipment and electricity), and the miners stop mining or start mining some other currency. What then? Is it true that if miners stop mining, then Bitcoin will stop working or will work too slowly?

No. As we found out above, the blockchain constantly adapts the criteria for the “beauty” of the created blocks so that, on average, the speed of their creation is constant. If there are 10 times fewer miners, the new block will have to “shake” 10 times less, but the blockchain itself will fully perform its functions.

So far, the growth of the exchange rate more than compensates for the drop in rewards, but someday the main profit will come from transfer fees, which the miner also takes. They will not remain without work and without reward.

Conclusion

We figured out what mining really is, why it is needed, who and when it is profitable to mine, where all the video cards have disappeared from the stores, and why some manufacturers now release video cards immediately without going to the monitor.

The material was taken from: https://blog.kaspersky.ru/

Видеокарта Gigabyte специально для майнинга — сразу без всяких ненужных вещей вроде выхода на монитор.

Видеокарта Gigabyte специально для майнинга — сразу без всяких ненужных вещей вроде выхода на монитор.